Last month, London Business School hosted the Tax Bootcamp 2024, led by Marcel Olbert (Assistant Professor of Accounting at London Business School) and Rebecca Lester (Associate Professor of Accounting and Senior Fellow at the Stanford Institute for Economic Policy Research) and supported by the Wheeler Institute. Building on the 2023 Global Tax Conference at LBS, the bootcamp focussed on three key objectives:

- To understand and discuss the challenges and opportunities around the digital and AI-based transformation of businesses, and the implications for business taxation.

- To assess the current state of tax and related policy tools that aim to mitigate climate change, building on insights from academic research and practitioners’ experiences.

- To bring together leading scholars, practitioners, and policymakers in a small and intimate setting to establish sustainable relationships and future collaborations.

The bootcamp officially began by addressing some of the most pressing and talked-about issues of the decade: AI, the technological revolution, and tax across different geographies and regions, including developing countries. This article is the first of a three-part series. Find out more about parts two and three of the Bootcamp: ‘Taxes for Climate Action Across the World’ and ‘New Research on Taxes and Transparency Regulation for Sustainability’.

Leveraging AI and the Tax Functions of the Future: Abhijay Jain (AI Tax Leader at PwC UK)

‘What does AI mean for tax practitioners?’ Abhijay Jain asked, as he opened the discussion on AI and tax from the practitioner perspective. ‘The concept of AI has existed for over 70 years,’ he reminded listeners, ‘and dates back to the computers used to break codes during the Second World War. Machine learning is not new; it has been used by tax authorities to spot inconsistencies in records for years. Generative AI has simply introduced an unprecedented speed and scale to machine learning, both in terms of technological development and industry engagement. After all, it took Netflix nearly three and a half years to reach one million users; it took ChatGPT only five days. The challenge, then, is for tax professionals to keep up.’

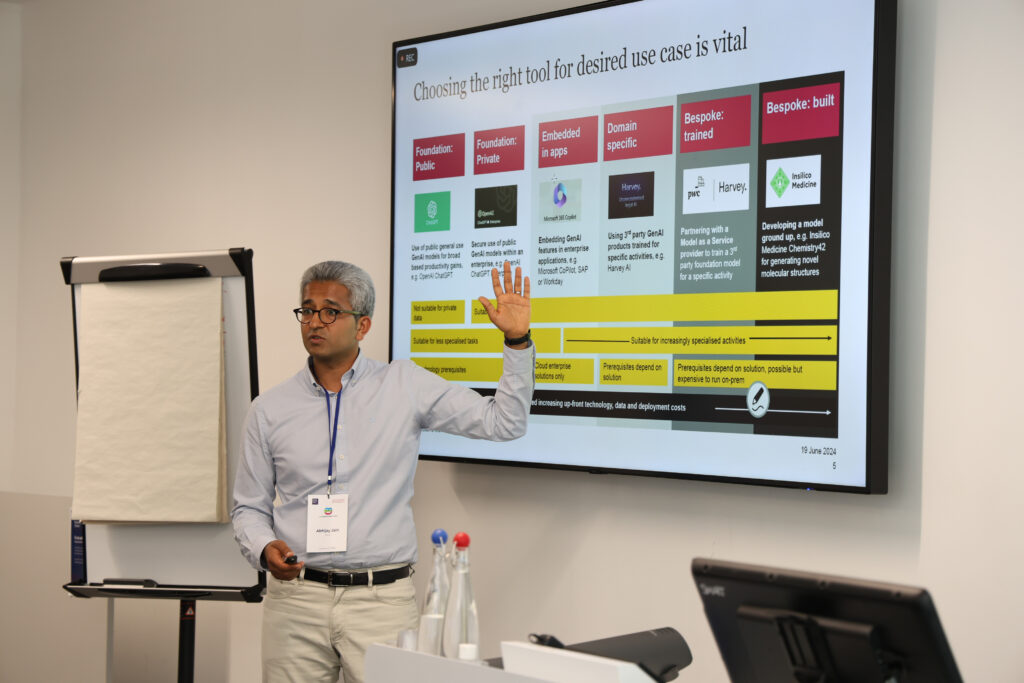

Jain emphasised that choosing the right tool for the desired use case is vital. For instance, public general-purpose GenAI models such as OpenAI’s ChatGPT are suitable for broad-based productivity gains, but bespoke AI models are necessary to provide clients with bespoke solutions. Bespoke models fall into two broad categories: purpose-trained and purpose-built. The former entails partnering with a model-as-a-service provider to train a third-party foundation model for a specific activity. PwC’s strategic alliance with AI startup Harvey is an example: PwC’s Tax & Legal Services (TLS) professionals use Harvey’s models to train client-specific AI. Sometimes, however, a model has to be developed from the ground up, as in the case of Insilico Medicine, the AI pharmaceutical solutions company that has developed models for generating novel molecular structures. ‘Remember,’ he argued, ‘finite use case patterns offer infinite possibilities: models broadly help to streamline summarisation, technical research, data extraction, data analytics, mass document uploads and general productivity.’ In most organisations, tax is not a function with unlimited resources or budget. It primarily serves as a risk management function: you want it there to make sure nothing goes wrong, to help the top line and to protect the bottom line. AI allows tax functions to do both in ways they have not done before, which is one of the many reasons why it is vital to invest in tax and AI.

He went on to stress that Large Language Models (LLMs) pose several problems for tax professionals, including misinformation and inaccuracy, systematic bias and static data, among others. But the main barriers to using AI in tax today are trust and a narrow focus on GenAI rather than AI more widely. Future barriers will range from access to quality data and knowledge to upskilling the next generation; training and education will need to adapt.

There will also be knock-on changes that help drive adoption. As technology changes, tax authorities will need to react, and when they start using AI to check compliance, taxpayers will react in turn. This dynamic sets off something of an ‘arms race’ between the two sides over whose AI is better, which leads to societal changes and raises a range of key questions. What if there are mass layoffs or more leisure time due to AI? In that future, where will tax revenue come from? Do we need to start collecting tax in a different way? Do we need to tax the AI? Do we offer new incentives to ensure that certain industries are protected?

He concluded with a quote from Canadian Prime Minister Justin Trudeau, ‘The pace of change has never been this fast, yet it will never be this slow again’.

AI Use by Tax Stakeholders and Traceability of AI Legal Reasoning: Holger Maier (Managing Director at FGS Digital GmbH)

Holger Maier took to the stage to discuss AI and tax law best practice, focussing on transparency in AI decision-making processes from a legal perspective. FGS is one of Germany’s leading commercial law firms. As a medium-sized firm, it cannot afford to train its own AI models, so GenAI is important to it.

Maier opened by addressing the benefits and challenges associated with AI and tax law. On the one hand, AI can lead to increased efficiencies, improved accuracy, enhanced decision-making, faster processing times, and cost savings. On the other, it could compound a lack of transparency, and there is broad potential for bias, plus a range of liability, data privacy and security concerns.

‘So,’ he proposed, ‘we need AI systems that are designed to provide clear explanations of decision-making processes and outputs: we need “Explainable AI” (XAI).’ This transparency is important for regulatory compliance and accountability, for building trust with stakeholders, for mitigating legal and ethical risks, and for conforming to the EU’s AI Act as well as to broader ethical and judicial demands as they continue to develop.

How does XAI work? XAI enhances legal AI with different explainability methods to ensure transparency in AI-driven decisions. These include user-friendly explanation generation, knowledge-augmented prompting, sample-based explanation and a range of attribution methods. Maier then explained the Retrieval-Augmented Generation (RAG) workflow, in which relevant passages are first retrieved from a curated knowledge base and then passed to the model as context, so that each output can be traced back to its sources. The workflow provides a blueprint for building a company-wide AI framework, such as the FGS Legal CoPilot.
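Maier did not show code, but the retrieval step that makes a RAG output traceable can be illustrated. The following is a minimal sketch under stated assumptions: the three passages, their source IDs and the functions `retrieve` and `build_prompt` are hypothetical; a simple bag-of-words cosine similarity stands in for the embedding models that production systems typically use; and no language model is actually called. It shows only how each passage handed to a model can carry a source ID that the answer is asked to cite.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base: every passage carries a source ID so that a
# generated answer can be traced back to the material it was grounded in.
KNOWLEDGE_BASE = {
    "template-07": "Service agreement template with limitation of liability clause.",
    "memo-12": "Internal memo on Pillar Two top-up tax for constituent entities.",
    "statute-03": "Minimum tax rules apply to groups with revenue above the threshold.",
}


def tokenize(text: str) -> Counter:
    """Lower-case bag-of-words representation of a passage or query."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Rank passages by similarity to the query; keep the top k with a non-zero score."""
    q = tokenize(query)
    scored = [(sid, cosine(q, tokenize(text))) for sid, text in KNOWLEDGE_BASE.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [(sid, score) for sid, score in scored[:k] if score > 0]


def build_prompt(query: str, k: int = 2) -> str:
    """Assemble a grounded prompt in which each retrieved passage is labelled by source ID."""
    hits = retrieve(query, k)
    context = "\n".join(f"[{sid}] {KNOWLEDGE_BASE[sid]}" for sid, _ in hits)
    return (
        "Answer using only the sources below and cite their IDs.\n"
        f"{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("Pillar Two top-up tax"))
```

For the query "Pillar Two top-up tax", the `[memo-12]` passage is ranked first, so a reviewer can check the cited source directly rather than trust an unexplained output. Real systems would add a vector index, access controls and the model call itself; the design choice shown here is simply that provenance travels with every piece of retrieved context.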

The FGS Legal CoPilot is a model used by lawyers. Maier demonstrated how it can be used in contract development, working through an example in which the XAI model generated a contract tailored to specific circumstances, based on past models and sources. The technology has streamlined the standard process by at least 50-60%. There is no black-boxing: the AI-generated document is used only as a starting point to be reviewed and finalised, and all computational decision-making processes are transparent and traceable.

XAI models can also be used to enhance the customer experience. For instance, the FGS Tax & Finance Platform allows clients to resolve queries on their own using the Pillar2Pro chatbot, an XAI model trained on national legislation that helps answer client questions. The chatbot is briefed on tone to convey the company’s ethos and to give it a sense of personality; Pillar2Pro, for example, is briefed to be ‘kind of funny’.

Research Opportunities on AI and Corporate Decision Making: Eve Labro (Professor of Accounting at UNC Chapel Hill)

Professor Labro opened her talk with a series of questions. From a broader accounting perspective, what can we work on within these gigantic developments in AI? What is the landscape and what are firms doing? What kind of decisions or processes can be supported or facilitated by AI? What is the impact of AI usage on firm profitability and risk?

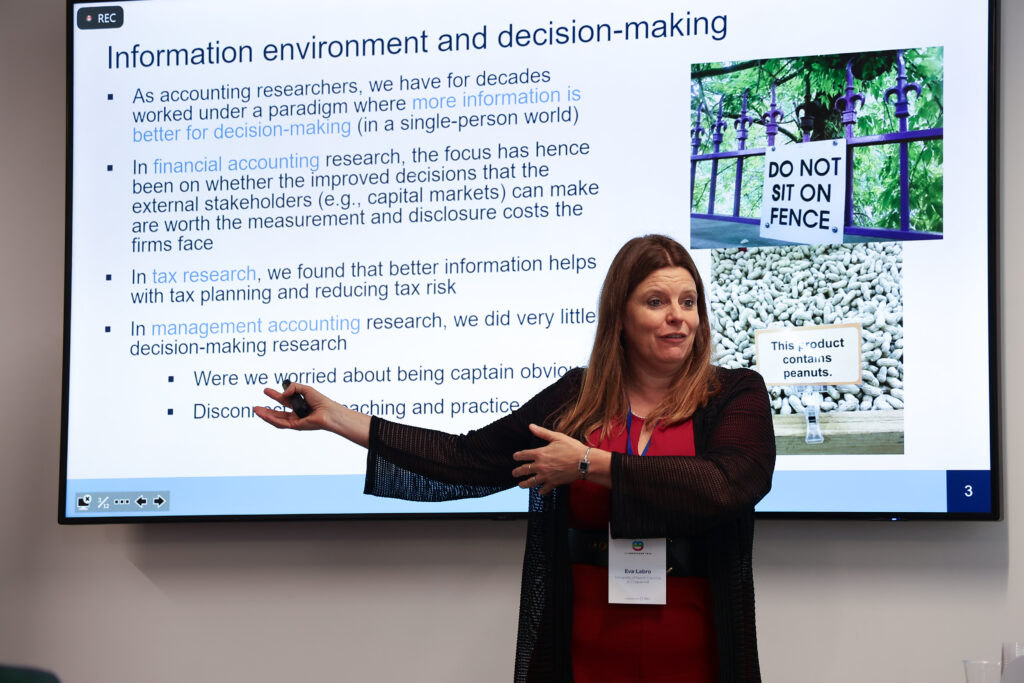

AI represents the most fundamental shift in the information environment we have seen in decades, and it allows us to revisit many decision-making problems. For decades, we have assumed that more information leads to better decisions, an assumption that has driven research agendas in many subfields. In financial accounting, the question has been whether the improved decisions that external stakeholders can make are worth the measurement and disclosure costs that firms face. In tax research, we have found that better information, not necessarily more information, helps with tax planning and reducing tax risk.

‘We need to consider how AI might improve and also worsen decision making,’ Professor Labro argued. AI improves decision making because it gives us faster access to a greater volume of information. More complex models account for a greater number of factors, so they readily lubricate information flows between internal parties. But AI might worsen decision making because it can draw on data gathered for purposes other than the decision at hand, so that relevant information gets ‘drowned out’. Moreover, we might ‘overfit’ models, and it is difficult to convey the credibility of soft, tacit knowledge when it competes with models supported by hard data. Gallo, Labro and Omartian (working paper, 2023) found that plants with high data intensity make less accurate forecasts of TVS growth than plants with less, albeit more relevant, data. The more data-intensive forecasts are less accurate because they incorporate less information about the local economy, having been designed to draw on information available at broader levels.

Q&A

‘How are you thinking about training and jobs – particularly entry level jobs – becoming obsolete?’

Abhijay Jain replied by asking his own question, ‘Where are the gaps that will emerge from a skills perspective?’ and arguing that ‘those are what we need to teach now, rather than stop employing new people. We need to upskill in new ways, for instance in review skills. There are implications for the education system, which must adapt, and right now there is a question mark over what universities need to teach people to prepare them for employment. One school of thought is that the technology will develop to the point of self-review, so review skills become obsolete. You therefore teach people the implications and what the role of workers will become. Another is that AI technology at its heart is a predictive mathematical model, so we may be able to use that to teach people how to support other things.’

About the organisers

Marcel Olbert is an Assistant Professor of Accounting at London Business School. Named as one of the 2024 Poets & Quants Best 40-Under-40 MBA Professors, his research interests focus on the real effects of corporate taxation and disclosure regulation – examining how multinational businesses respond to incentives that stem from their regulatory and macroeconomic environment. His broad range of experience includes investment banking within the M&A advisory group of JP Morgan London, strategy consulting with Roland Berger and international tax and private equity with PwC and Flick Gocke Schaumburg. Marcel also serves as an Associate Editor at the European Accounting Review. His work has been accepted for publication in The Accounting Review, the Journal of Accounting and Economics, the Journal of Accounting Research, and the Review of Financial Studies. His current research on tax reforms and multinational firm investment as well as on carbon taxes and carbon leakage in developing countries is featured by the Wheeler Institute for Business and Development. Marcel is seeking applications for professional research assistantships.

Rebecca Lester is an Associate Professor of Accounting and one of three inaugural Botha-Chan Faculty Scholars at Stanford Graduate School of Business. She is also a Research Fellow at the Stanford Institute for Economic Policy Research (SIEPR) and at the Hoover Institution. Her research studies how tax policies affect corporate investment and employment decisions. In particular, she examines the role of reporting incentives, disclosure regimes, and information frictions in facilitating or altering the effectiveness of cross-border, federal, and local tax incentives. Her recent work explores national-level tax benefits, such as U.S. manufacturing tax incentives, European intellectual property tax regimes, and tax loss offsets. Other work examines state, local, and neighborhood-level incentives, including firm-specific tax incentives and the recent Opportunity Zones tax incentive. Professor Lester received her PhD in accounting from the MIT Sloan School of Management, and she has a BA and a Master of Accountancy from the University of Tennessee. Prior to her studies at MIT, she worked for eight years at Deloitte in Chicago, including five years in the M&A Transaction Services practice.